On May 30, 2025, Youngah, our Lab Principal Investigator, delivered a compelling keynote at the Media For All 2025 conference at The University of Hong Kong. Titled “Empowering Cultural Preservation and Inclusivity Through Technology: Innovations in Hong Kong Sign Language”, the address showcased our lab’s pioneering efforts to preserve Hong Kong Sign Language (HKSL) and promote inclusivity for the Deaf community.

Preserving HKSL’s Cultural Heritage

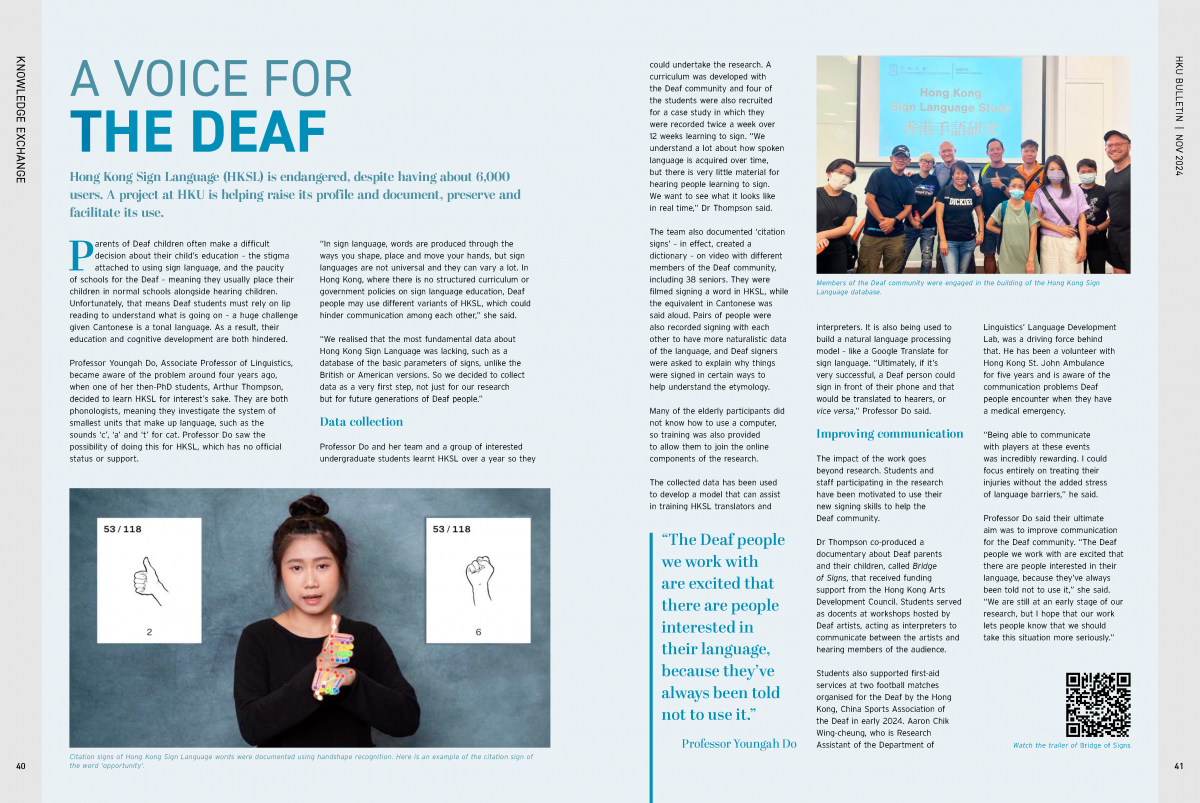

Our research focuses on safeguarding the linguistic and cultural richness of HKSL. Through meticulous documentation and archiving of HKSL signs, narratives, and dialogues, we are building a lasting repository to ensure this vital aspect of Hong Kong’s heritage endures. These efforts provide a foundation for cultural preservation, enabling future generations to engage with and learn from the Deaf community’s unique linguistic identity.

Breakthroughs in Sign Language Technology

Central to our work is an HKSL handshape detection model, which uses machine learning to improve the accuracy and speed of sign language recognition. This technology is a significant step forward in interpreting HKSL and supports smoother communication between the Deaf and hearing communities. Key applications include:

- A comprehensive HKSL curriculum designed for hearing learners, making the language accessible to a broader audience and fostering cross-community understanding.

- Practical tools, such as real-time sign language interpretation for paramedic services, which supports effective communication during emergencies, and accessibility support for art exhibitions, which enriches cultural participation for Deaf individuals.

Building Bridges Between Communities

Our work goes beyond technology: it’s about building unity. By developing tools that facilitate communication, we aim to create a deeper connection between the Deaf and hearing communities. These efforts promote a society that celebrates diversity, embraces cultural heritage, and ensures inclusivity for all.

Youngah’s keynote resonated with attendees, sparking conversations about the role of technology in social good. The Media For All 2025 conference provided an ideal platform to share our vision, and we’re excited to continue this journey toward a more inclusive future.

Looking Ahead

The advancements shared in the keynote are just the beginning. Our team remains dedicated to pushing the boundaries of HKSL research and its applications. We invite collaborators, community partners, and stakeholders to join us in this mission to preserve HKSL and empower the Deaf community.

For more information about our work or to explore potential partnerships, please contact our lab through the Knowledge Exchange Office at The University of Hong Kong. Together, we can create a more inclusive and culturally rich society.