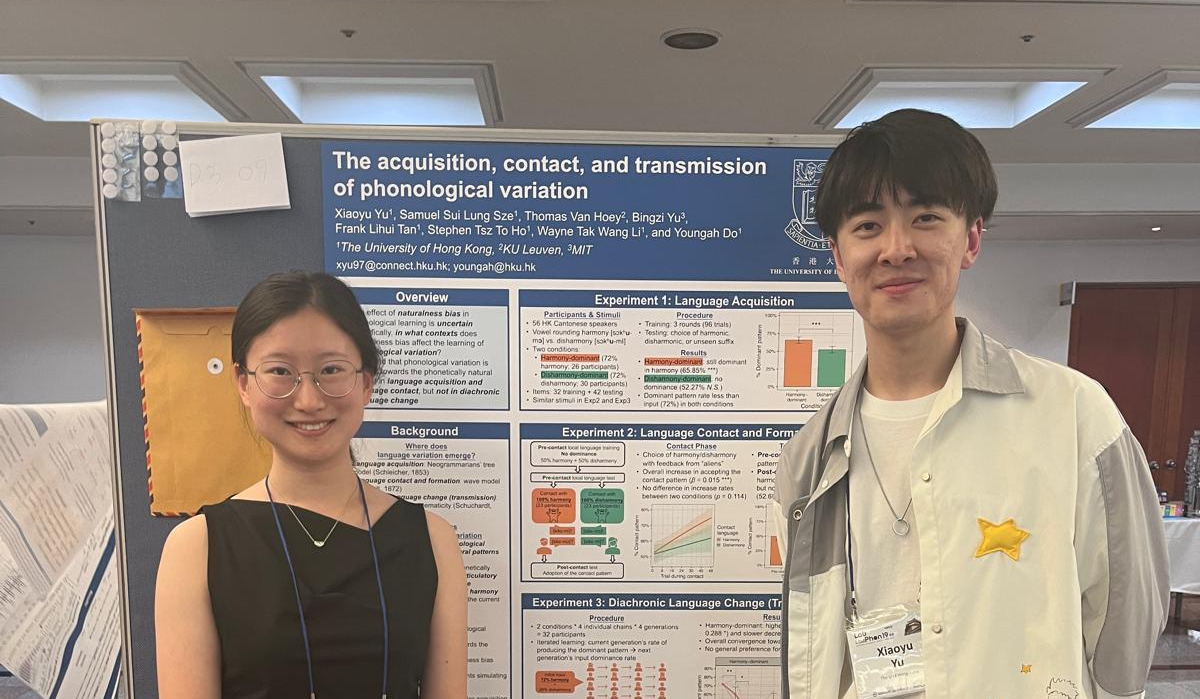

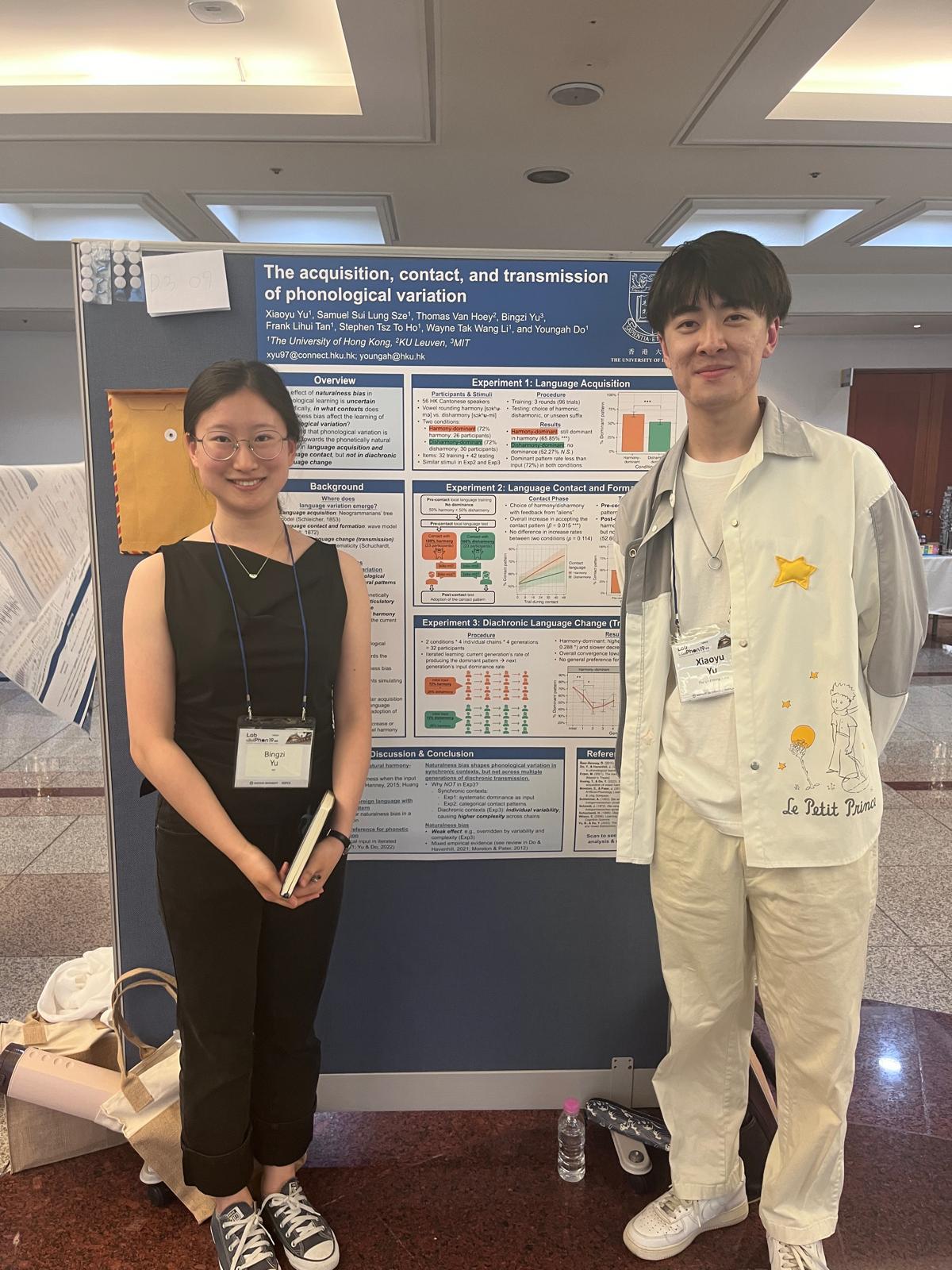

Our lab was well represented at the Annual Meeting on Phonology (AMP 2025) at UC Berkeley. Ivy, Frank, and Youngah not only enjoyed a fun Waymo experience but also presented their research, as summarized below.

Youngah, along with scholars from Harvard University, presented a paper titled “Investigating the Tone-Segment Asymmetry in Phonological Counting: A Learnability Experiment.” The collaborators on this research were Jian Cui, Hanna Shine, and Jesse Snedeker.

Frank, Ivy, and Youngah presented a talk titled “Modeling Prosodic Development with Prenatal Audio Attenuation.”

Additionally, Youngah participated in a keynote panel discussion on “Future Directions in Deep Phonology” with other scholars, including Volya Kapatsinski, Joe Pater, Mike Hammond, Jason Shaw, and Huteng Dai.

Overall, AMP 2025 was a rewarding opportunity for our team to engage in in-depth conversations with leading experts in phonology, fostering new ideas and collaborations that will propel our research forward.